How to Build Agentic AI: A Comprehensive Guide 🧠

January 2, 2025, 12 min read

Most AI today is still playing the assistant role. It answers questions, follows commands, makes decent guesses. But it doesn’t initiate. It waits. It reacts. It’s smart, sure, but it’s not exactly out here making calls.

Agentic AI is the version designed to take control.

These are systems that don’t just process data. They do much more: Agentic AI systems set goals, make decisions, and take action with minimal supervision. They operate like digital agents, not digital tools. It’s the kind of AI that doesn’t just answer your email. It drafts the response, schedules the meeting, and cancels your dentist appointment because it knows you’ll reschedule it anyway.

In this guide, we’re unpacking exactly what Agentic AI is, why it matters, and how to build one from the ground up. I’ll walk you through the architecture, the tech stack, the pitfalls, and of course, the ethical stuff too. You don’t need a PhD to follow along, just curiosity and maybe a stubborn refusal to let the robots be boring.

Time to put on the safety goggles, folks.

What is Agentic AI?

If you think of regular AI as a super helpful intern (fast, obedient, always waiting for instructions), then Agentic AI is a team member. Not just any team member, but one who shows up with a plan, adjusts when things go sideways, and keeps working even when no one’s watching. It doesn’t just react to data. It has initiative. It’s designed to pursue goals, adapt to changing environments, and act independently (within limits you define, fortunately).

At its core, Agentic AI refers to systems that operate like agents (very informative and clear, right?):

- They observe the world (or at least the data they’re fed),

- Make decisions based on that input,

- And then act—sometimes in creative, even unexpected ways.

This isn’t your basic predictive model, just outputting probabilities or classifying emails as spam. Agentic AI does things. It can learn from experience, adjust strategies mid-task, and even negotiate between conflicting objectives. Think autonomous cars rerouting in real time, customer service bots that know when to escalate, or financial AIs that shift strategies based on market mood swings.

The key difference? Autonomy. Not “let it run wild” autonomy, but systems built to act with minimal human intervention while still aligning with a broader goal or mission. They’re not just answering questions; they’re taking action. As we’ve said a million times already. But what’s one more time? Taking action, autonomy, agents, initiative, etc. Get it yet?

Why Build Agentic AI?

We’ve trained AI to label cats, suggest movies, and autofill emails. Cute. But at some point, you stop wanting a glorified autocomplete and start needing an AI that can actually think ahead. That’s why Agentic AI was born.

Here’s why Agentic AI matters (and why people are scrambling to build it):

Solving Problems

Agentic AI doesn’t just recognize issues; it seizes them. Whether it’s a looming supply chain glitch, a cybersecurity risk, or a customer about to rage-quit the support chat, an agentic system doesn’t wait for instructions. It acts, sometimes before the issue even becomes a problem.

What If The Scenario Changes?

Markets crash. Customers switch gears. Code breaks. In business, something almost always goes wrong at some point. Agentic AI is built to adapt to that, fast. It reevaluates, adjusts the plan, and keeps moving without spiraling into an existential crisis (unlike, say, me on Mondays).

It Handles Complexity

We’re talking layered, multi-step processes across environments and domains. Agentic AI thrives in chaos, whether it’s balancing competing KPIs, reacting to new data, or navigating a task with too many moving parts for rule-based bots. It can weigh several things at once, often before you’ve even figured out what’s wrong. Jealous?

Less Babysitting, More Autonomy

You don’t need to monitor every micro-decision. Give it a goal, set some boundaries, and let it do its thing. This isn’t AI as a pet; it’s AI as a colleague. Whatever you have in store for it, it’s got it in the bag. Time to relax, pal.

It Works Everywhere

From robotic surgeons to logistics AIs that reroute trucks in real time, from smart tutors to fraud-detecting finance agents, Agentic AI isn’t tied to one field. It’s a mindset, not a niche.

Building Agentic AI means graduating from automation to autonomy. From delegation to collaboration. From “Do what I say” to “Handle it.”

And trust me, you’ll want to be on the right side of that shift.

The Anatomy of Agentic AI

Because of course, you can’t build the brain without understanding the wiring.

Contrary to popular belief, Agentic AI isn’t magic. It’s a modular, layered system, carefully engineered to act like an autonomous agent. If you want to build something that doesn’t just react, but actually decides, adapts, and executes, here are the core ingredients you need to get right.

1. Perception

Your agent needs input, raw data from the world around it. This could be physical (sensors, cameras) or digital (text, APIs, user behavior). It needs to see, read, and listen in whatever domain it operates.

Typical tools: NLP for language, computer vision for images, time-series data for environments that change over time. This is where situational awareness begins.
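To make the perception layer concrete, here is a minimal sketch for the time-series case. It assumes a single numeric sensor stream; the class name `TimeSeriesPerceiver`, the window size, and the 50% deviation threshold are illustrative choices, not part of any particular library. Raw readings go in, and a structured observation (latest value, smoothed value, anomaly flag) comes out for the reasoning layer to use.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Observation:
    value: float      # latest raw reading
    smoothed: float   # moving average over the recent window
    anomalous: bool   # True if the reading jumps far from the recent baseline


class TimeSeriesPerceiver:
    """Hypothetical perception module: turns a raw numeric stream into observations."""

    def __init__(self, window: int = 5, threshold: float = 0.5):
        self.window = deque(maxlen=window)  # only the most recent readings are kept
        self.threshold = threshold          # relative deviation that counts as anomalous

    def perceive(self, reading: float) -> Observation:
        # Baseline = average of the *previous* readings (or the reading itself at start)
        baseline = sum(self.window) / len(self.window) if self.window else reading
        anomalous = baseline != 0 and abs(reading - baseline) / abs(baseline) > self.threshold
        self.window.append(reading)
        smoothed = sum(self.window) / len(self.window)
        return Observation(reading, smoothed, anomalous)


perceiver = TimeSeriesPerceiver(window=3)
for r in [10.0, 11.0, 10.0, 30.0]:
    print(perceiver.perceive(r))  # only the jump to 30.0 is flagged as anomalous
```

The design choice here is to compare each reading against the average of earlier readings, so a single spike cannot hide itself by dragging the baseline up first.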

2. Reasoning

Once it perceives the world, the agent needs to decide what to do about it. This is your logic layer where goals meet data. ‘What were we supposed to do again’ kind of thing.

Depending on complexity, this could mean simple decision trees, probabilistic models, or reinforcement learning. The goal is to evaluate options, weigh outcomes, and choose an action.
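Of the options listed, the easiest to show is a probabilistic one: score each candidate action by its expected payoff and pick the best. The sketch below assumes you can attach rough probabilities and payoffs to each outcome; the action names and numbers are made up for illustration. A decision tree or a learned RL policy could replace `choose_action` without changing the rest of the agent.

```python
from typing import Dict, List, Tuple

# Each candidate action maps to a list of (probability, payoff) outcomes.
Outcomes = List[Tuple[float, float]]


def expected_utility(outcomes: Outcomes) -> float:
    # Probability-weighted payoff; probabilities for one action should sum to 1
    return sum(p * payoff for p, payoff in outcomes)


def choose_action(options: Dict[str, Outcomes]) -> str:
    # Evaluate every option and return the one with the highest expected payoff
    return max(options, key=lambda name: expected_utility(options[name]))


# Illustrative numbers only
options = {
    "auto_refund":    [(0.9, 10.0), (0.1, -50.0)],  # usually fine, occasionally costly
    "escalate_human": [(1.0, 3.0)],                  # safe but slow
    "ignore":         [(0.5, 0.0), (0.5, -20.0)],
}
print(choose_action(options))  # → auto_refund (expected payoff 4.0 vs 3.0 vs -10.0)
```

If the cost of a bad refund rises to -100, the expected payoff of `auto_refund` drops to -1.0 and the same function picks `escalate_human` instead. That is the "weigh outcomes" step doing its job.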

3. Memory

Without memory, your agent lives in the moment like a goldfish. And that’s not what you want.

It needs both short-term recall (what just happened) and long-term memory (what’s worked before, what failed miserably). Whether it’s neural memory networks or a structured database of past actions, memory lets the agent improve, personalize, and avoid dumb repetition.
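Here is a minimal sketch of that two-tier idea, assuming memory only needs to hold action outcomes. A bounded `deque` plays the role of short-term recall, and a per-action success tally stands in for long-term memory; a production agent might swap the tally for a database. `AgentMemory` and its methods are hypothetical names.

```python
from collections import defaultdict, deque
from typing import DefaultDict, List


class AgentMemory:
    """Hypothetical two-tier memory: a short rolling buffer plus long-term outcome stats."""

    def __init__(self, short_term_size: int = 3):
        self.short_term = deque(maxlen=short_term_size)  # most recent events only
        # Long-term: action -> [successes, attempts], never forgotten
        self.long_term: DefaultDict[str, List[int]] = defaultdict(lambda: [0, 0])

    def record(self, action: str, succeeded: bool) -> None:
        self.short_term.append((action, succeeded))
        stats = self.long_term[action]
        stats[0] += int(succeeded)
        stats[1] += 1

    def success_rate(self, action: str) -> float:
        # .get() avoids creating an entry for actions never tried
        successes, attempts = self.long_term.get(action, (0, 0))
        return successes / attempts if attempts else 0.0

    def recently_failed(self, action: str) -> bool:
        # Avoid dumb repetition: did this action fail within the short-term window?
        return any(a == action and not ok for a, ok in self.short_term)


memory = AgentMemory(short_term_size=2)
memory.record("retry_payment", False)
memory.record("send_email", True)
print(memory.recently_failed("retry_payment"))  # True: still in short-term memory
```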

4. Action

This is the part where your agent does something: sends a command, clicks a button, triggers an API, moves a robot, composes a response. Without this layer, all the reasoning in the world is just academic.

Your action system needs to be reliable, responsive, and secure, especially if your agent is operating in high-stakes environments.
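One way to make the action layer reliable and secure is to route every action through a single executor that refuses anything not on an allow-list and retries transient failures. This is a minimal sketch under those assumptions: `ActionExecutor` is a hypothetical name, and treating `ConnectionError` as retryable is an illustrative policy. Retrying is only safe for idempotent actions, so a real system would decide per action.

```python
from typing import Callable, Dict, Optional


class ActionExecutor:
    """Hypothetical action layer: only registered actions run; transient errors are retried."""

    def __init__(self, max_retries: int = 2):
        self.registry: Dict[str, Callable[..., str]] = {}
        self.max_retries = max_retries

    def register(self, name: str, fn: Callable[..., str]) -> None:
        self.registry[name] = fn  # explicit allow-list of what the agent may do

    def execute(self, name: str, **kwargs) -> str:
        if name not in self.registry:
            raise PermissionError(f"action {name!r} is not allowed")
        last_error: Optional[Exception] = None
        for _ in range(self.max_retries + 1):  # first attempt plus retries
            try:
                return self.registry[name](**kwargs)
            except ConnectionError as err:     # treated as transient, so try again
                last_error = err
        raise RuntimeError(f"action {name!r} failed after retries") from last_error


executor = ActionExecutor()
executor.register("send_email", lambda to: f"sent to {to}")
print(executor.execute("send_email", to="ops@example.com"))
```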

5. Learning

Here’s where autonomy gets real. A true agent learns, not just during training but after deployment. It updates its strategy based on new inputs, successes, failures, and even subtle shifts in context.

This could involve supervised learning, reinforcement learning, or unsupervised pattern detection. The point is: your agent evolves.
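Reinforcement learning is the easiest of these to show in a few lines. The sketch below is an epsilon-greedy multi-armed bandit, a deliberately simple stand-in for full RL: the agent keeps a running value estimate for each strategy, mostly exploits the best one, occasionally explores, and updates its estimates after every reward, so its behavior keeps shifting after deployment. Strategy names and reward values are hypothetical.

```python
import random
from typing import Dict, Iterable


class EpsilonGreedyLearner:
    """Keeps a running average reward per strategy and updates it online."""

    def __init__(self, strategies: Iterable[str], epsilon: float = 0.1, seed: int = 0):
        self.values: Dict[str, float] = {s: 0.0 for s in strategies}  # estimated reward
        self.counts: Dict[str, int] = {s: 0 for s in self.values}
        self.epsilon = epsilon              # fraction of steps spent exploring
        self.rng = random.Random(seed)      # seeded for reproducibility

    def choose(self) -> str:
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(self.values))   # explore a random strategy
        return max(self.values, key=self.values.get)    # exploit the best estimate

    def update(self, strategy: str, reward: float) -> None:
        # Incremental mean: new = old + (reward - old) / n
        self.counts[strategy] += 1
        self.values[strategy] += (reward - self.values[strategy]) / self.counts[strategy]


# Hypothetical environment: "discount_offer" works better than "standard_reply"
true_reward = {"standard_reply": 0.2, "discount_offer": 0.8}
learner = EpsilonGreedyLearner(true_reward, epsilon=0.1)
for _ in range(500):
    s = learner.choose()
    learner.update(s, true_reward[s])
print(learner.counts)  # most steps end up on "discount_offer"
```

The rewards here are fixed to keep the example deterministic; real rewards would be noisy, which is exactly what the running average is there to smooth out.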

6. Ethical Boundaries

AI has power, but it needs limits. These systems don’t get ethics “by default.” You need to hard-code guardrails and continuously audit behavior.

That means constraints, accountability measures, and tools like AI fairness testing, explainability frameworks, and bias detection models. Otherwise, you’re building a really smart liability.
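Hard-coded guardrails can be as plain as a review step that every proposed action must pass before the action layer runs it. The sketch assumes three illustrative rules (a blocked-action list, a monetary limit, and mandatory escalation for anything touching user data) and logs every verdict for later audit. The names and limits are hypothetical; fairness testing and explainability tooling would sit alongside this, not replace it.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class ProposedAction:
    name: str
    amount: float = 0.0             # e.g. money moved by the action
    affects_user_data: bool = False


@dataclass
class Guardrails:
    """Hypothetical hard constraints checked before any action executes."""
    blocked_actions: Set[str] = field(default_factory=lambda: {"delete_account"})
    max_amount: float = 1_000.0
    audit_log: List[str] = field(default_factory=list)

    def review(self, action: ProposedAction) -> str:
        if action.name in self.blocked_actions:
            verdict = "block"                        # never allowed, no matter what
        elif action.amount > self.max_amount or action.affects_user_data:
            verdict = "escalate"                     # a human has to sign off
        else:
            verdict = "allow"
        self.audit_log.append(f"{action.name}: {verdict}")  # trail for audits
        return verdict


guardrails = Guardrails()
print(guardrails.review(ProposedAction("refund", amount=5_000.0)))  # → escalate
```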

Put these together and you’ve got the blueprint for an AI system that can actually function like an agent and not just pose as one. Skip a step and you’re either building something useless, dangerous, or both.

Key Tools and Technologies Needed

Let’s break it down by what each tool actually does for your system.

Data Collection and Preprocessing

Agentic AI lives and dies by its data. It needs constant, structured input to understand the world and respond intelligently.

Tools to use:

Garbage in, garbage out. This is the part where you clean the garbage.

Machine Learning Frameworks

The engine room of any smart agent. These frameworks train your models and help them evolve.

Popular choices:

Pick based on your use case—and how close you want to get to the machine.

Natural Language Processing (NLP)

If your agent needs to understand or generate language (and most do), this is where you’ll want to be.

Libraries worth knowing:

Whether it’s parsing user input or writing an email, NLP is what makes the agent feel human-adjacent.

Simulation and Training Environments

You wouldn’t drop a toddler into a corporate job without practice. Same logic here. Simulations let your agent train without breaking things.

Options:

Infrastructure and Scaling

Training and running Agentic AI at scale takes serious horsepower. Cloud platforms give you compute, storage, and dev tools without melting your laptop.

Top players:

This is your backend brainpower. Get it wrong, and your agent bottlenecks hard.

Ethics, Fairness, and Interpretability

Autonomy without accountability is a lawsuit waiting to happen. These tools help your system behave transparently, fairly, and safely.

Use these early and often:

This is how you make sure your agent doesn’t accidentally discriminate, hallucinate, or wreak havoc on the wrong Slack channel.

Stack these tools strategically, and you’ve got what you need to build something that’s not just smart, but agentic. Next up, we’ll talk process: how to actually piece this all together in the right order without losing your mind.

How To Build Agentic AI Step By Step

Define the Goal

- Get ultra-specific about what your agent should accomplish.

- Define inputs, desired outputs, constraints, and success metrics.

- Example: “Identify and escalate high-risk transactions in real time” beats “Do fraud stuff.”
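Writing the goal down as a data structure forces the specificity the checklist asks for. This is a minimal sketch using the fraud example; the field names, inputs, constraints, and target values are illustrative assumptions, not recommended thresholds. `is_met` turns the success metrics into a check you can run after every evaluation.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class GoalSpec:
    objective: str
    inputs: List[str]
    outputs: List[str]
    constraints: List[str]
    success_metrics: Dict[str, float]  # metric name -> minimum acceptable value

    def is_met(self, observed: Dict[str, float]) -> bool:
        # Every metric must reach its target; a missing metric is treated as 0.0
        return all(observed.get(name, 0.0) >= target
                   for name, target in self.success_metrics.items())


fraud_goal = GoalSpec(
    objective="Identify and escalate high-risk transactions in real time",
    inputs=["transaction amount", "merchant ID", "account history"],
    outputs=["risk score", "escalation ticket"],
    constraints=["decide within 200 ms", "never freeze an account without human review"],
    success_metrics={"recall": 0.90, "precision": 0.70},  # hypothetical targets
)
print(fraud_goal.is_met({"recall": 0.93, "precision": 0.75}))  # → True
```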

Gather and Prepare the Data

- Collect high-quality, relevant, and diverse datasets.

- Clean, label, and structure your data. Bad input = bad agent.

- Check for bias, duplication, and missing context. You’re feeding this thing so don’t feed it junk.
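A quick audit pass can catch duplication and missing context before training. The sketch below assumes tabular records stored as dictionaries; `audit_dataset` and the sample rows are hypothetical. Its label-balance number is only a crude first signal: a real bias check would compare error rates and outcomes across groups, not just count labels.

```python
from collections import Counter
from typing import Any, Dict, List


def audit_dataset(rows: List[Dict[str, Any]], required: List[str], label: str) -> Dict[str, float]:
    """Report duplicate rows, rows with missing required fields, and label balance."""
    seen, duplicates, missing = set(), 0, 0
    for row in rows:
        key = tuple(sorted(row.items()))  # identical rows produce identical keys
        if key in seen:
            duplicates += 1
        seen.add(key)
        if any(row.get(f) in (None, "") for f in required):
            missing += 1
    labels = Counter(row.get(label) for row in rows)
    total = sum(labels.values())
    # Share of the most common label: values near 1.0 signal a heavily skewed dataset
    majority_share = max(labels.values()) / total if total else 0.0
    return {"duplicates": duplicates, "missing": missing, "majority_share": majority_share}


rows = [
    {"amount": 120, "country": "DE", "fraud": 0},
    {"amount": 120, "country": "DE", "fraud": 0},   # exact duplicate
    {"amount": 75, "country": "", "fraud": 0},      # missing context
    {"amount": 9000, "country": "US", "fraud": 1},
]
print(audit_dataset(rows, required=["amount", "country"], label="fraud"))
# → {'duplicates': 1, 'missing': 1, 'majority_share': 0.75}
```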

Choose the Right Algorithms

- Use deep learning for pattern-heavy tasks like vision or language.

- Apply reinforcement learning for environments where actions affect future outcomes.

- Leverage Bayesian models when reasoning under uncertainty.

- Don’t be afraid to go hybrid—real-world systems usually are.
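To show what reasoning under uncertainty looks like in practice, here is a Beta-Binomial update, one of the simplest Bayesian models. The belief about an unknown rate (here, a hypothetical merchant's fraud rate) is a Beta distribution whose two parameters act as pseudo-counts, so updating on new evidence is just addition: after k frauds in n transactions, Beta(α, β) becomes Beta(α + k, β + n − k). The Beta(1, 1) prior is an assumption meaning "no idea yet".

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BetaBelief:
    """Belief about an unknown rate as a Beta(alpha, beta) distribution."""
    alpha: float = 1.0  # prior pseudo-count of fraud cases
    beta: float = 1.0   # prior pseudo-count of legitimate cases

    def update(self, frauds: int, legit: int) -> "BetaBelief":
        # Conjugate update: add observed counts to the pseudo-counts
        return BetaBelief(self.alpha + frauds, self.beta + legit)

    @property
    def mean(self) -> float:
        return self.alpha / (self.alpha + self.beta)

    @property
    def variance(self) -> float:
        a, b = self.alpha, self.beta
        return a * b / ((a + b) ** 2 * (a + b + 1))


prior = BetaBelief()                       # Beta(1, 1): uniform, "no idea yet"
small = prior.update(frauds=2, legit=8)    # 10 transactions observed
large = prior.update(frauds=20, legit=80)  # 100 transactions, same 20% fraud ratio
print(small.mean, large.mean)              # 0.25 vs about 0.206
print(small.variance > large.variance)     # True: more data, less uncertainty
```

Both beliefs land near the observed 20%, but the second is much tighter. An agent could, for example, only act automatically once the variance drops below some threshold you choose.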

Design the Agent’s Architecture

- Break it into functional layers: perception, reasoning, memory, action, feedback.

- Prioritize modularity so you can update one part without breaking the rest.

- Test in simulation before sending it out into the wild. Seriously.
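Here is a minimal sketch of that layering, using Python's `typing.Protocol` to define each layer's interface. The concrete classes (a clamping perception layer, a threshold rule, and an actuator that only records decisions) are hypothetical stand-ins. Because the actuator just records, the whole loop can be exercised in simulation before any real hardware or API is attached, and any layer can be swapped without touching the others.

```python
from typing import List, Protocol, Tuple


class Perception(Protocol):
    def observe(self, raw: float) -> float: ...

class Reasoning(Protocol):
    def decide(self, observation: float) -> str: ...

class Actuator(Protocol):
    def act(self, decision: str) -> None: ...


class ClampPerception:
    def observe(self, raw: float) -> float:
        return max(0.0, min(100.0, raw))  # discard physically impossible readings

class ThresholdReasoning:
    def __init__(self, limit: float):
        self.limit = limit
    def decide(self, observation: float) -> str:
        return "cool" if observation > self.limit else "idle"

class RecordingActuator:
    """Simulation stand-in: records actions instead of touching real hardware."""
    def __init__(self) -> None:
        self.history: List[str] = []
    def act(self, decision: str) -> None:
        self.history.append(decision)


class Agent:
    def __init__(self, perception: Perception, reasoning: Reasoning, actuator: Actuator):
        self.perception, self.reasoning, self.actuator = perception, reasoning, actuator
        self.memory: List[Tuple[float, str]] = []  # (observation, decision) for feedback

    def step(self, raw: float) -> str:
        obs = self.perception.observe(raw)
        decision = self.reasoning.decide(obs)
        self.actuator.act(decision)
        self.memory.append((obs, decision))
        return decision


actuator = RecordingActuator()
agent = Agent(ClampPerception(), ThresholdReasoning(limit=25.0), actuator)
for reading in [20.0, 30.0, 150.0]:  # 150.0 is a faulty sensor spike
    agent.step(reading)
print(actuator.history)  # → ['idle', 'cool', 'cool']
```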

Train the Agent

- Use controlled environments (simulators, historical data) before going live.

- Monitor learning behavior. If it’s just optimizing for speed and ignoring quality, course-correct.

- Reinforcement learning isn’t a set-it-and-forget-it situation. You’re coaching, not commanding.
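Below is a sketch of training in a controlled environment: tabular Q-learning on a toy simulator where the agent starts at one end of a five-cell corridor and is rewarded for reaching the other end. The simulator, reward values, and hyperparameters (`alpha`, `gamma`, `epsilon`) are illustrative assumptions. The small per-move penalty is the kind of reward design you have to watch: change it carelessly and the agent will happily optimize the wrong thing.

```python
import random
from typing import Dict, Tuple

# Toy simulator: cells 0..4 on a line; reaching cell 4 ends the episode.
N_STATES, GOAL = 5, 4
ACTIONS = (-1, +1)  # step left or step right


def sim_step(state: int, action: int) -> Tuple[int, float, bool]:
    next_state = min(max(state + action, 0), N_STATES - 1)
    reward = 1.0 if next_state == GOAL else -0.01  # small cost for every move
    return next_state, reward, next_state == GOAL


def train_q_learning(episodes: int = 200, alpha: float = 0.5, gamma: float = 0.9,
                     epsilon: float = 0.2, seed: int = 0) -> Dict[Tuple[int, int], float]:
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)                        # explore
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])  # exploit
            nxt, reward, done = sim_step(state, action)
            best_next = 0.0 if done else max(q[(nxt, a)] for a in ACTIONS)
            # Q-learning update toward reward + discounted best future value
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = nxt
    return q


q = train_q_learning()
policy = [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(GOAL)]
print(policy)  # learned move per cell; +1 means "step toward the goal"
```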

Implement Feedback Loops

- Build in mechanisms for reflection and self-improvement.

- Use evaluation metrics, reward adjustments, and correction protocols.

- Track what went wrong and teach the agent how to recover better next time.
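Here is a minimal sketch of a correction protocol, assuming a risk-scoring agent whose decisions are later labeled by human reviewers. Each labeled mistake is tallied by type and nudges the escalation threshold: up after a false alarm, down after a miss. `FeedbackLoop`, the starting threshold, and the step size are hypothetical; a real system would usually also retrain on the labeled cases rather than only shift one number.

```python
from collections import Counter


class FeedbackLoop:
    """Hypothetical feedback loop for a risk-scoring agent."""

    def __init__(self, threshold: float = 0.5, step: float = 0.05):
        self.threshold = threshold   # scores at or above this get escalated
        self.step = step             # how far one mistake moves the threshold
        self.errors = Counter()      # tally of mistake types, for reporting

    def decide(self, risk_score: float) -> bool:
        return risk_score >= self.threshold  # True = escalate

    def feedback(self, risk_score: float, was_fraud: bool) -> None:
        escalated = self.decide(risk_score)
        if escalated and not was_fraud:
            self.errors["false_positive"] += 1
            self.threshold = min(1.0, self.threshold + self.step)  # fewer false alarms
        elif not escalated and was_fraud:
            self.errors["false_negative"] += 1
            self.threshold = max(0.0, self.threshold - self.step)  # fewer misses


loop = FeedbackLoop(threshold=0.5, step=0.05)
loop.feedback(risk_score=0.45, was_fraud=True)  # missed fraud: threshold drops one step
loop.feedback(risk_score=0.40, was_fraud=True)  # missed again: drops another step
print(loop.decide(0.42), dict(loop.errors))     # a similar case is now escalated
```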

Deploy, Monitor, and Adjust

- Start small, in a limited environment with strict guardrails.

- Add real-time monitoring, alerts, and fallback systems.

- Keep a human in the loop for critical or high-risk decisions.

- Iterate constantly. The first version will be wrong. That’s the point.
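Monitoring, alerts, fallbacks, and a human in the loop can be combined in a small runtime wrapper. This sketch assumes a circuit-breaker pattern: it tracks the agent's recent outcomes in a rolling window, raises an alert and routes all work to humans once the error rate crosses a limit, and always routes high-risk cases to humans. `CircuitBreaker`, the window size, and the 30% default limit are illustrative assumptions.

```python
from collections import deque
from typing import List


class CircuitBreaker:
    """Hypothetical runtime monitor: trips to a safe fallback when errors spike."""

    def __init__(self, window: int = 10, max_error_rate: float = 0.3):
        self.results = deque(maxlen=window)  # rolling record of recent outcomes
        self.max_error_rate = max_error_rate
        self.tripped = False
        self.alerts: List[str] = []

    def record(self, ok: bool) -> None:
        self.results.append(ok)
        error_rate = self.results.count(False) / len(self.results)
        window_full = len(self.results) == self.results.maxlen  # avoid tripping on tiny samples
        if not self.tripped and window_full and error_rate > self.max_error_rate:
            self.tripped = True
            self.alerts.append(f"error rate {error_rate:.0%} exceeded limit; routing to humans")

    def route(self, high_risk: bool) -> str:
        # Humans handle high-risk calls always, and everything once the breaker trips
        if self.tripped or high_risk:
            return "human_review"
        return "agent"


breaker = CircuitBreaker(window=5, max_error_rate=0.4)
for ok in [True, False, True, False, False]:
    breaker.record(ok)
print(breaker.route(high_risk=False), breaker.alerts)
```

Once tripped, the breaker stays tripped until someone resets it on purpose, which keeps a human decision between a misbehaving agent and production traffic.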

No single checklist can cover every use case, but if you’ve nailed the above, congratulations: you’ve built something that can think, act, and improve without being micromanaged. Just remember: autonomy is a moving target. Your agent’s job is to evolve, and your job is to make sure it evolves in the right direction. Keep testing, keep refining, and most importantly, don’t let it outgrow its ethical leash.

Challenges in Building Agentic AI

This is the part no one brags about in their case study.

Agentic AI sounds impressive, and it is, but building it is a whole different beast than spinning up a chatbot or slapping an API on a model. The more autonomy you give your system, the more chaos it has to navigate, and the more ways it can fail if you’re not careful. Here’s what you’re really up against.

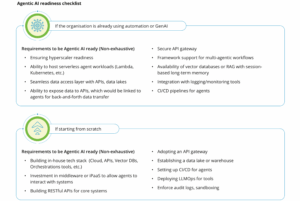

But before all of this, there is an even bigger question to answer: “Should you be building Agentic AI?” Or: “Are you ready to bring Agentic AI into your workflow?” These are hard questions, ones that require an uncomfortable confrontation. That is exactly why they matter. Your answers will determine a lot and may eventually save you the headache and the responsibility. So, please, be honest with yourself.

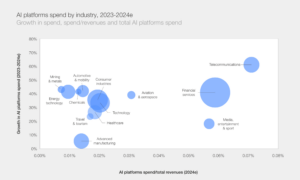

Deloitte makes this important point in its latest report on Agentic AI:

“Depending on the maturity of their technology ecosystem, organisations can be at varying stages of readiness for Agentic AI adoption. For instance, organisations already using GenAI, automation and Large Language Models (LLMs) typically operate in a multisystem environment with robust data pipelines, orchestration layers and integration frameworks. These organisations often have a preferred LLM embedded into their workflows, supported by automation platforms (RPA and iPaaS) and APIs that enable seamless interaction across systems.”

It’s Complex (Like, Really Complex)

You’re not just building a model, you’re building a full system that senses, thinks, remembers, acts, and learns. That’s a lot of moving parts to keep in sync. One laggy module can tank the whole thing.

It’s Data-Hungry and Picky

Agentic AI doesn’t just need data; it needs the right data. That means diverse, high-quality, and context-rich inputs. And if your data’s biased or incomplete? Your agent will learn exactly the wrong lessons, with total confidence.

It’s a Long-Term Investment

Training takes time. Monitoring takes time. Fine-tuning, debugging, retraining? More time. This isn’t a weekend side project. If you want reliability, you need to treat it like infrastructure, not a feature.

Explainability is Still a Work in Progress

Sure, your agent made a decision—but why? Black-box behavior is a liability, especially when you’re working in sensitive domains. Making reasoning transparent is hard, but non-negotiable.

Ethical Alignment is an Ongoing Battle

Your agent may technically follow the rules, but still make decisions that are misaligned with human values. You’ll need to test edge cases, monitor for unintended consequences, and update ethical constraints regularly. This isn’t a one-and-done situation.

Costs Add Up Fast

Training large models, running simulations, spinning up cloud infrastructure: it’s all expensive. And if you’re deploying in real-time environments, expect compute costs to stay high. Don’t go in blind without a budget buffer.

Building Agentic AI means playing on hard mode. It’s ambitious, expensive, and full of unknowns. But if you’re willing to deal with the mess? You’ll be ahead of the curve—while everyone else is still trying to fine-tune their chatbot’s tone.

The Future of Agentic AI

As technologies like quantum computing and neuromorphic chips advance, Agentic AI will become even more powerful and efficient. These systems will revolutionize industries by performing tasks once thought to be exclusively human, such as creative problem-solving and empathetic interactions. As Marvin Minsky wrote in The Society of Mind:

“Each mind is made of many smaller processes. These we’ll call agents. Each mental agent by itself can only do some simple thing that needs no mind or thought at all. Yet when we join these agents in societies—in certain very special ways-this leads to true intelligence.”

Building Agentic AI is not just about creating autonomous systems, it’s about designing agents that can think, act, and learn in ways that add genuine value to our lives. By focusing on clear goals, robust architecture, and ethical considerations, you can create AI that’s both powerful and responsible.

Feeling confident enough to take on the challenge? The future of it could be you!

Source: Nvidia